Docker in Unprivileged LXC on a Debian 11 Host

On Linux, LXC and Docker are two different takes on containerization. I won't dwell on the terminology too much here as many other sites explain how they work in detail. Essentially, LXC focuses on OS-level containerization (like a virtual machine, but with a shared kernel), while Docker focuses on containerizing individual apps.

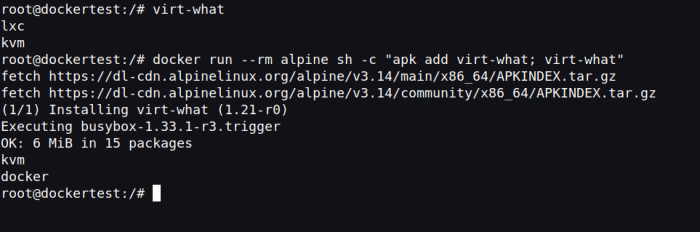

In newer Linux setups, it is possible to mix the two, i.e. to run Docker inside an LXC container. In particular, this can be set up in a way that supports unprivileged LXC containers, which remap all user accounts inside the container (including root!) to unprivileged users on the host, reducing the impact of potential container escapes. Docker also supports user remapping by itself, though it isn't enabled by default as of writing.

You might wonder: what's the point of nesting containers? Essentially, doing so lets you work with app-level containers inside an isolated environment of its own. This could be useful if you use orchestration tools, and don't want to add more moving parts to your host system or deal with the overhead of a full virtual machine. In my case, I found container nesting useful because it allows me to run community-maintained infrastructure as an isolated slice inside a bigger machine. Normally, Docker access is root-equivalent, and rootless mode wasn't too appealing either as I'd have to either tinker with sudo settings (to access the Docker account) or use a single shared user for maintenance (forgoing fine-grained access control).

However, I ran into one big pain point trying to set this up. Just about every guide for Docker inside unprivileged LXC assumes you're using Proxmox VE! Indeed, setting this up in Proxmox is easy - you just have to add two options (features: keyctl=1,nesting=1), and things pretty much work out of the box. For everyone else though, there's a lot of misleading / outdated advice suggesting that this isn't possible, or that you need to use a privileged LXC container instead (which removes any security benefits of LXC). Surely, if Proxmox can make this work, so can any other Linux host!

Attempt 1: Docker in a Debian 11 LXC guest

First, I had to configure my system for unprivileged LXC. There are a few steps involved to this:

- Installing an LXC CLI. I used the classic LXC interface (apt install lxc), which provides commands like lxc-start, lxc-attach, etc. Note that this is NOT Ubuntu's lxd, which confusingly uses a CLI binary named... lxc, with entirely different commands.

- Creating a UID mapping in /etc/subuid and /etc/subgid for root, or whichever user you want to run containers as. Then, you can add this to /etc/lxc/default.conf to have it apply for all new containers. The Arch Wiki has a good example: https://wiki.archlinux.org/title/Linux_Containers#Enable_support_to_run_unprivileged_containers_(optional)

- Creating the actual container (I used the name dockertest). I created mine as root to have system-wide unprivileged containers separate from my regular account, but this shouldn't have any major impact besides changing where the containers are stored:

$ sudo DOWNLOAD_KEYSERVER=keys.openpgp.org lxc-create -t download dockertest

After that, I edited my container config to look something like this:

lxc.include = /usr/share/lxc/config/common.conf

lxc.include = /usr/share/lxc/config/userns.conf

lxc.arch = linux64

# idmap options should match step 2

lxc.idmap = u 0 100000 65536

lxc.idmap = g 0 100000 65536

lxc.rootfs.path = dir:/var/lib/lxc/dockertest/rootfs

lxc.uts.name = dockertest

# networking config is out of the scope for this article, but you might find https://wiki.debian.org/LXC/SimpleBridge useful

lxc.net.0.type = veth

lxc.net.0.link = lxcbr0

lxc.net.0.flags = up

lxc.apparmor.profile = generated

lxc.apparmor.allow_nesting = 1

Then you can start the container using sudo lxc-start -n dockertest, and shell in using sudo lxc-attach -n dockertest (the latter is also arguably more convenient than bootstrapping logins for a virtual machine).

- Installing Docker inside the container (apt install docker.io)
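For the UID mapping step, the entries in /etc/subuid and /etc/subgid and the lxc.idmap lines in the container config need to describe the same range. A minimal sketch of that relationship, assuming the 100000-165535 range used in this article (the helper name subid_to_idmap is made up for illustration and is not part of LXC):

```shell
#!/bin/sh
# Hypothetical helper: turn one /etc/subuid or /etc/subgid entry
# ("user:first_host_id:count") into the matching lxc.idmap line.
# $1 = "u" for subuid entries, "g" for subgid entries; $2 = the entry.
subid_to_idmap() {
    kind="$1"
    start=$(printf '%s\n' "$2" | cut -d: -f2)
    count=$(printf '%s\n' "$2" | cut -d: -f3)
    # Container IDs start at 0 (root) and map onto host IDs from $start.
    printf 'lxc.idmap = %s 0 %s %s\n' "$kind" "$start" "$count"
}

# Example entry (an assumption), as it might appear in /etc/subuid and
# /etc/subgid for root.
subid_to_idmap u "root:100000:65536"
subid_to_idmap g "root:100000:65536"
```

Once the container is running, cat /proc/self/uid_map inside it should list the same three numbers (0, 100000 and 65536 in this example), which is a quick way to confirm the container really is unprivileged.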

Now I tried to run a container, and...

root@dockertest:/# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

2db29710123e: Pull complete

Digest: sha256:37a0b92b08d4919615c3ee023f7ddb068d12b8387475d64c622ac30f45c29c51

Status: Downloaded newer image for hello-world:latest

docker: Error response from daemon: OCI runtime create failed: container_linux.go:367: starting container process caused: process_linux.go:495: container init caused: process_linux.go:458: setting cgroup config for procHooks process caused: can't load program: operation not permitted: unknown.

ERRO[0002] error waiting for container: context canceled

No dice!

Turns out, this is likely an issue related to Docker's support for cgroups v2, which was first added in the 20.10 branch. Debian 11 ships with Docker v20.10.5 and enables cgroups v2 by default. One option could be to revert to cgroups v1, but upgrading Docker through their Apt repository worked for me as well. This was suggested on the Proxmox forums.
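To see which cgroup hierarchy a system is actually using, one common check is the filesystem type mounted at /sys/fs/cgroup. A small sketch of that check follows; the function name is hypothetical, and the mapping of cgroup2fs to v2 and tmpfs to v1 reflects the usual systemd layouts rather than anything specific to this setup:

```shell
#!/bin/sh
# Hypothetical helper: classify the output of `stat -fc %T /sys/fs/cgroup`.
cgroup_version() {
    case "$1" in
        cgroup2fs) echo "v2" ;;       # unified hierarchy
        tmpfs)     echo "v1" ;;       # legacy or hybrid hierarchy
        *)         echo "unknown" ;;
    esac
}

fstype=$(stat -fc %T /sys/fs/cgroup 2>/dev/null)
echo "cgroups: $(cgroup_version "$fstype")"

# Print the Docker daemon version if Docker is installed and reachable.
if command -v docker >/dev/null 2>&1; then
    docker version --format '{{.Server.Version}}' 2>/dev/null || true
fi
```

Running this inside the LXC guest shows both pieces of information relevant to the error above: the cgroup version and the Docker daemon version.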

Attempt 2: a newer Docker version

I upgraded to the latest Docker version (20.10.9 as of writing) and tried again:

root@dockertest:/# docker run hello-world

Hello from Docker!

This message shows that your installation appears to be working correctly.

...It runs! However, docker info shows that Docker is using vfs as the storage backend, which is highly inefficient. To quote the Docker website:

The vfs storage driver is intended for testing purposes, and for situations where no copy-on-write filesystem can be used. Performance of this storage driver is poor, and is not generally recommended for production use.

Because vfs uses copies for layers, disk space usage can also balloon dramatically: see e.g. https://github.com/mailcow/mailcow-dockerized/issues/840#issuecomment-354292076. This would definitely have become an issue in my setup!
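A quick way to check the active storage driver without reading the full docker info output is to filter for the relevant line. The helper below is a hypothetical sketch that works on docker info text from standard input; the sample input is made up to match the shape of the real output:

```shell
#!/bin/sh
# Hypothetical helper: pull the "Storage Driver" value out of `docker info`
# text read from standard input.
storage_driver() {
    sed -n 's/^[[:space:]]*Storage Driver:[[:space:]]*//p'
}

# Sample input in the same shape as real docker info output.
printf 'Server:\n Server Version: 20.10.9\n Storage Driver: vfs\n' | storage_driver
```

On a live system this would be used as docker info | storage_driver. Docker can also print the field directly with docker info --format '{{.Driver}}'.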

# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Build with BuildKit (Docker Inc., v0.6.3-docker)

scan: Docker Scan (Docker Inc., v0.8.0)

Server:

Containers: 2

Running: 0

Paused: 0

Stopped: 2

Images: 1

Server Version: 20.10.9

Storage Driver: vfs

...

Attempt 3: faster storage with overlayfs

Following the Docker guide for storage drivers, I tried switching to overlay2. I created a /etc/docker/daemon.json containing:

{

"storage-driver": "overlay2"

}

...And this made Docker fail to start.

Oct 23 06:07:11 dockertest dockerd[7988]: time="2021-10-23T06:07:11.946239710Z" level=error msg="failed to mount overlay: operation not permitted" storage-driver=overlay2

Oct 23 06:07:11 dockertest dockerd[7988]: failed to start daemon: error initializing graphdriver: driver not supported

I did some more digging and found that OverlayFS mounts for unprivileged users are an Ubuntu-specific feature! This was done using a kernel patch, which unfortunately brought about its own problems early on - see CVE-2015-1328.

However, unprivileged OverlayFS mounts did make their way into upstream Linux in 5.11. But for the time being, Debian 11 is stuck on kernel 5.10 (unless you use backports, but then the trade-off becomes slower security support).
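Whether a given host clears the 5.11 threshold can be checked from uname -r. The sketch below is one way to do the comparison, assuming GNU sort with -V support; the function name is hypothetical, and a new enough version number is only a hint, since distribution kernels can add or disable features independently of the upstream version:

```shell
#!/bin/sh
# Hypothetical helper: succeed if kernel release $1 (e.g. the output of
# `uname -r`) is at least version $2. Relies on `sort -V` (GNU coreutils).
kernel_at_least() {
    release="${1%%-*}"            # drop suffixes such as "-9-amd64"
    lowest=$(printf '%s\n%s\n' "$2" "$release" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

if kernel_at_least "$(uname -r)" 5.11; then
    echo "kernel is 5.11 or newer"
else
    echo "kernel is older than 5.11"
fi
```

Since containers share the host kernel, the result is the same on the LXC host and inside the guest.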

Attempt 4: faster storage with fuse-overlayfs

I was about to give up with this experiment until I discovered another option: fuse-overlayfs. As the name suggests, it's a wrapper around OverlayFS using FUSE, originally designed for rootless Docker (and podman too!). According to the LXD folks, FUSE is namespace-aware and thus safe for unprivileged containers.

To try this, I installed fuse-overlayfs on both the LXC host and guest (apt install fuse-overlayfs), and set /etc/docker/daemon.json to use that driver:

{

"storage-driver": "fuse-overlayfs"

}

Now Docker actually starts, but trying to start containers gives a different error:

root@dockertest:/# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

2db29710123e: Pull complete

Digest: sha256:37a0b92b08d4919615c3ee023f7ddb068d12b8387475d64c622ac30f45c29c51

Status: Downloaded newer image for hello-world:latest

docker: Error response from daemon: using mount program fuse-overlayfs: fuse: device not found, try 'modprobe fuse' first

fuse-overlayfs: cannot mount: No such file or directory

: exit status 1.

See 'docker run --help'.

This error message is a bit confusing, as modprobe inside the guest will not work, and modprobe on the host does not appear to change a thing. Turns out, this error occurs because /dev/fuse is not passed into the LXC guest!
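A simple way to confirm this diagnosis is to test for the device node on both sides. The following sketch (with a hypothetical helper name) reports whether /dev/fuse exists as a character device in the environment where it is run:

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 exists and is a character device.
is_char_device() {
    [ -c "$1" ]
}

if is_char_device /dev/fuse; then
    echo "/dev/fuse is present"
else
    echo "/dev/fuse is missing in this environment"
fi
```

On the host the device is normally present once the fuse module is loaded. Running the same test in the guest, for example with sudo lxc-attach -n dockertest -- test -c /dev/fuse, would be expected to fail until the device is passed through.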

The solution, sourced from this GitHub comment, is essentially bind-mounting /dev/fuse into the container, by adding to the LXC container config:

lxc.mount.entry = /dev/fuse dev/fuse none bind,create=file,rw,uid=100000,gid=100000 0 0

The UID and GID should be equal to the root account inside the container, i.e. whatever user 0 maps to in your idmap. For example, an idmap of u 0 100000 65536 means you should use uid=100000.
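To avoid mismatched IDs, the mount entry can be derived from the idmap lines already in the container config. This is a rough sketch with a hypothetical helper; it assumes the config uses the "key = value" spacing shown in this article and that each idmap starts at container ID 0:

```shell
#!/bin/sh
# Hypothetical helper: read LXC config lines on standard input and print the
# /dev/fuse bind-mount entry, using the host IDs that container root (ID 0)
# maps to in the lxc.idmap lines. Fails if either mapping is missing.
fuse_mount_entry() {
    awk '
        $1 == "lxc.idmap" && $3 == "u" && $4 == 0 { uid = $5 }
        $1 == "lxc.idmap" && $3 == "g" && $4 == 0 { gid = $5 }
        END {
            if (uid == "" || gid == "") exit 1
            printf "lxc.mount.entry = /dev/fuse dev/fuse none bind,create=file,rw,uid=%s,gid=%s 0 0\n", uid, gid
        }
    '
}

# Sample config lines matching the idmap used in this article.
printf 'lxc.idmap = u 0 100000 65536\nlxc.idmap = g 0 100000 65536\n' | fuse_mount_entry
```

For a system-wide container, the input would typically be the container's config file, e.g. fuse_mount_entry < /var/lib/lxc/dockertest/config, assuming the default LXC path.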

After restarting the LXC guest:

root@dockertest:/# docker run hello-world

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

You now have working Docker inside unprivileged LXC, with reasonably performant storage too!

TL;DR

Getting Docker to run inside an unprivileged LXC guest on a Debian 11 host required:

- Configuring unprivileged LXC: creating an idmap, and adding lxc.apparmor.profile = generated and lxc.apparmor.allow_nesting = 1 to the container options

- Installing Docker >= 20.10.7 from their Apt repository

- Installing fuse-overlayfs on the host and guest, and using it as the Docker storage driver

- Bind-mounting /dev/fuse into the LXC guest

Alternatively, you may be able to skip the fuse-overlayfs parts by upgrading to Linux >= 5.11, but I have not tested this yet.

Aside: how does Proxmox get this to work?

If I had to guess, the keyctl and nesting options needed for Docker support are related to AppArmor. However, another subtle difference is that although Proxmox VE is based on Debian, it uses a custom kernel.

And that custom kernel pulls from Ubuntu, so it inherits all of their magic patches for containers! 😄